Difference between HIGH AVAILABILITY and DISASTER RECOVERY

It is common to see people confuse High Availability (HA) with Disaster Recovery (DR), or assume they are the same thing. A high-availability solution does not mean that you are prepared for a disaster. High availability covers hardware or system-related failures, whereas disaster recovery is used in the event of a catastrophic failure, for example one caused by environmental factors. Although some high-availability options may help when designing a DR strategy, they are not the be-all and end-all solution.

The goal of high availability is to provide an uninterrupted user experience with zero data loss. According to Microsoft’s SQL Server Books Online, “A high-availability solution masks the effects of a hardware or software failure and maintains the availability of applications so that the perceived downtime for users is minimized”.

Disaster recovery is generally part of a larger process known as Business Continuity Planning (BCP), which plans for any IT or non-IT eventuality. A server disaster recovery plan helps in taking proper preventive, detective and corrective measures to mitigate any server-related disaster. For more details, see the very good book “The Shortcut Guide to Untangling the Differences Between High Availability and Disaster Recovery” by Richard Siddaway. In my understanding, the quickest way to remember the difference is:

High Availability is @ LAN and Disaster Recovery is @ WAN

Temporary Stored Procedures

Posted: June 28, 2013 in Database AdministratorTags: # procedure, SQL Server Temporary procedure, SQL Server temporary staored procedure, SQL SERVER Temporary Stored Procedures, Stored procedure in tempdb, Temporary stored procedures

Temporary stored procedures are like normal stored procedures but, as their name suggests, have a short-term existence. There are two types, private (local) and global, which are analogous to temporary tables and are created by adding the # or ## prefix to the procedure name: # denotes a local temporary stored procedure, while ## denotes a global temporary stored procedure. These procedures do not exist after SQL Server is shut down.

Temporary stored procedures are useful when connecting to earlier versions of SQL Server that do not support the reuse of execution plans for Transact-SQL statements or batches. Any connection can execute a global temporary stored procedure. A global temporary stored procedure exists until the connection used by the user who created the procedure is closed and any currently executing versions of the procedure by any other connections are completed. Once the connection that was used to create the procedure is closed, no further execution of the global temporary stored procedure is allowed. Only those connections that have already started executing the stored procedure are allowed to complete. If a stored procedure not prefixed with # or ## is created directly in the tempdb database, the stored procedure is automatically deleted when SQL Server is shut down because tempdb is re-created every time SQL Server is started. Procedures created directly in tempdb exist even after the creating connection is terminated.
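As a minimal sketch of the two prefixes described above, the batch below creates one local and one global temporary procedure and then lists them from tempdb. The procedure names are hypothetical and chosen only for illustration.

```sql
-- Local temporary procedure: visible only to the connection that creates it.
-- (#GetServerTime is a hypothetical name.)
CREATE PROCEDURE #GetServerTime
AS
BEGIN
    SELECT GETDATE() AS ServerTime;
END;
GO

EXEC #GetServerTime;
GO

-- Global temporary procedure: any connection can execute it
-- while the creating connection remains open.
-- (##GetServerVersion is a hypothetical name.)
CREATE PROCEDURE ##GetServerVersion
AS
BEGIN
    SELECT @@VERSION AS ServerVersion;
END;
GO

EXEC ##GetServerVersion;
GO

-- Both objects are stored in tempdb.
SELECT name, create_date
FROM tempdb.sys.objects
WHERE type = 'P'
  AND name LIKE '#%';
GO

-- Optional explicit cleanup; otherwise they disappear with the connection.
DROP PROCEDURE #GetServerTime;
DROP PROCEDURE ##GetServerVersion;
GO
```

Running the `EXEC ##GetServerVersion` call from a second query window while the first one is still open is a quick way to see the difference in scope between the two prefixes.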

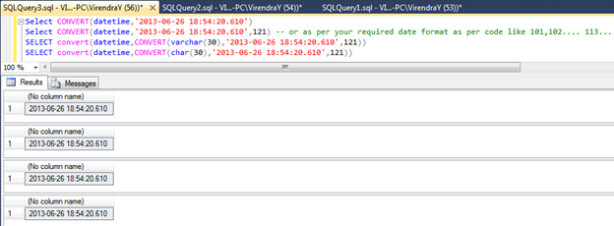

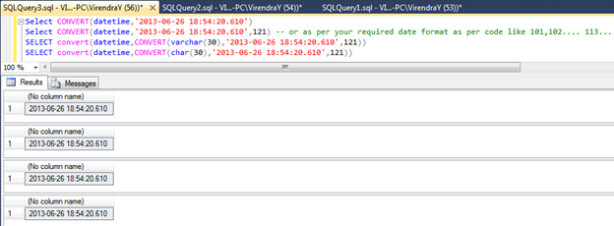

Converting Milliseconds/Nanoseconds date string values to Date/Datetime

Posted: June 27, 2013 in Database AdministratorTags: "Msg 241, Conversion failed when converting date and/or time from character string, Converting Date string values's miliseconds to Date/Datetime, Converting Milliseconds date string values to Date/Datetime, Level 16, Line 1, Msg 241 Level 16 State 1 Line 1, State 1

Data type conversion is a very common practice when manipulating or reproducing data, and we regularly use the conversion functions CONVERT and CAST in our daily routine.

Sometimes we need to convert a datetime value to a string, or a date string to a datetime.

A datetime value can be converted to a character string with CONVERT and a style code.

To convert a date string to DATE/DATETIME, we usually use CAST or CONVERT.

But if the fractional-seconds part has more than 3 digits, the conversion to DATETIME fails with the error message:

Msg 241, Level 16, State 1, Line 1

Conversion failed when converting date and/or time from character string.

To resolve this, use the DATETIME2 data type, introduced in SQL Server 2008. The beauty of DATETIME2 is that it supports fractional-second precision of up to 7 digits.
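The conversions discussed above can be sketched as follows. The literal date strings are made-up sample values, and the failing statement is left commented out so the batch runs cleanly.

```sql
-- Datetime to character string (style 121 = ODBC canonical format).
SELECT CONVERT(VARCHAR(23), GETDATE(), 121) AS DateAsString;

-- A date string with 3 fractional digits converts to DATETIME without error.
SELECT CAST('2013-06-27 10:15:30.123' AS DATETIME) AS ThreeDigits;

-- With 7 fractional digits the same conversion raises Msg 241.
-- Uncomment to reproduce the error:
-- SELECT CAST('2013-06-27 10:15:30.1234567' AS DATETIME);

-- The same string converts cleanly to DATETIME2.
SELECT CAST('2013-06-27 10:15:30.1234567' AS DATETIME2(7)) AS SevenDigits;

-- If a DATETIME is still required, go through DATETIME2 first.
SELECT CAST(CAST('2013-06-27 10:15:30.1234567' AS DATETIME2(7)) AS DATETIME) AS ViaDatetime2;
```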

SQL Server 2014 – Code Name : Hekaton

Posted: June 26, 2013 in Database AdministratorTags: aspirational goal, brent ozar, Column Store, ColumnStore, data warehousing and business intelligence, Hekaton, In-memory OLTP, software, SQL Codename - Hekaton, SQL Server 2014, SQL Server 2014 Features, SSD, technology, Whats new in SQL Server 2014, XVelocity column

SQL Server 2014 CTP1 was released on 26-Jun-2013. It is highly focused on data warehousing and business intelligence (BI) enhancements made possible through new in-memory capabilities built into the core Relational Database Management System (RDBMS). As memory prices have fallen dramatically, 64-bit architectures have become more common and usage of multicore servers has increased, Microsoft has sought to tailor SQL Server to take advantage of these trends.

Hekaton is a Greek term for “factor of 100.” The aspirational goal of the team was to see 100 times performance acceleration levels. Hekaton also is a giant mythical creature, as well as a Dominican thrash-metal band, for what it’s worth.

In-Memory OLTP (formerly known by the code name “Hekaton”) is a new database engine component, fully integrated into SQL Server. It is optimized for OLTP workloads accessing memory-resident data. In-Memory OLTP allows OLTP workloads to achieve remarkable improvements in performance and reduction in processing time. Tables can be declared as ‘memory optimized’ to take advantage of In-Memory OLTP’s capabilities. In-Memory OLTP tables are fully transactional and can be accessed using Transact-SQL. Transact-SQL stored procedures can be compiled into machine code for further performance improvements if all the tables referenced are In-Memory OLTP tables. The engine is designed for high concurrency, and blocking is minimal. “Memory-optimized tables are stored completely differently than disk-based tables and these new data structures allow the data to be accessed and processed much more efficiently.”
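To make the idea of a ‘memory optimized’ table and a natively compiled procedure more concrete, here is a minimal sketch. It uses the syntax of the released SQL Server 2014, which may differ in details from CTP1, and it assumes the current database already has a MEMORY_OPTIMIZED_DATA filegroup. The table, column and procedure names are hypothetical.

```sql
-- Assumption: the database already contains a MEMORY_OPTIMIZED_DATA filegroup.
-- dbo.ShoppingCart is a hypothetical table used only for illustration.
CREATE TABLE dbo.ShoppingCart
(
    CartId      INT       NOT NULL
        PRIMARY KEY NONCLUSTERED HASH WITH (BUCKET_COUNT = 1000000),
    UserId      INT       NOT NULL,
    CreatedDate DATETIME2 NOT NULL
)
WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA);
GO

-- Natively compiled procedure: allowed because it references
-- only memory-optimized tables. (dbo.usp_AddCart is hypothetical.)
CREATE PROCEDURE dbo.usp_AddCart
    @CartId INT,
    @UserId INT
WITH NATIVE_COMPILATION, SCHEMABINDING, EXECUTE AS OWNER
AS
BEGIN ATOMIC WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'us_english')
    INSERT INTO dbo.ShoppingCart (CartId, UserId, CreatedDate)
    VALUES (@CartId, @UserId, SYSDATETIME());
END;
GO

EXEC dbo.usp_AddCart @CartId = 1, @UserId = 42;

-- The table is still queried with ordinary Transact-SQL.
SELECT CartId, UserId, CreatedDate FROM dbo.ShoppingCart;
```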

New buffer pool extension support to non-volatile memory such as solid state drives (SSDs) will increase performance by extending SQL Server in-memory buffer pool to SSDs for faster paging.

Here is a very good explanation in Mr. Brent Ozar’s article on this ( http://www.brentozar.com/archive/2013/06/almost-everything-you-need-to-know-about-the-next-version-of-sql-server/ )

The New Feature xVelocity ColumnStore provides in-memory capabilities for data warehousing workloads that result in dramatic improvement for query performance, load speed, and scan rate, while significantly reducing resource utilization (i.e., I/O, disk and memory footprint). The new ColumnStore complements the existing xVelocity ColumnStore Index, providing higher compression, richer query support and updateability of the ColumnStore giving us the even faster load speed, query performance, concurrency and even lower price per terabyte.

Extending Memory to SSDs: Seamlessly and transparently integrates solid-state storage into SQL Server by using SSDs as an extension to the database buffer pool, allowing more in-memory processing and reducing disk IO.
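As a rough sketch of how the buffer pool extension is switched on, using the syntax of the released SQL Server 2014: the file path and size below are placeholders and would need to point at an actual SSD volume on your server.

```sql
-- Enable the buffer pool extension on an SSD volume.
-- 'F:\SSDCACHE\Example.BPE' and 50 GB are placeholder values.
ALTER SERVER CONFIGURATION
SET BUFFER POOL EXTENSION ON
    (FILENAME = 'F:\SSDCACHE\Example.BPE', SIZE = 50 GB);

-- Check the current configuration.
SELECT path, state_description, current_size_in_kb
FROM sys.dm_os_buffer_pool_extension_configuration;

-- Turn the extension off again.
ALTER SERVER CONFIGURATION
SET BUFFER POOL EXTENSION OFF;
```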

Enhanced High Availability: With the new AlwaysOn features, Availability Groups now support up to 8 secondary replicas that remain available for reads at all times, even in the presence of network failures. Failover Cluster Instances now support Windows Cluster Shared Volumes, improving the utilization of shared storage and increasing failover resiliency. Finally, various supportability enhancements make AlwaysOn easier to use.

Improved Online Database Operations: includes single partition online index rebuild and managing lock priority for table partition switch, greatly increasing enterprise application availability by reducing maintenance downtime impact.
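The two online operations mentioned above might look like the sketch below, written in the syntax of the released SQL Server 2014. The table, index and partition number are hypothetical, and the target table of the switch is assumed to have a matching structure and partition scheme.

```sql
-- Rebuild a single partition online, waiting at low priority for locks.
-- dbo.Sales, IX_Sales_OrderDate and partition 3 are hypothetical.
ALTER INDEX IX_Sales_OrderDate ON dbo.Sales
REBUILD PARTITION = 3
WITH (ONLINE = ON (WAIT_AT_LOW_PRIORITY (MAX_DURATION = 5 MINUTES, ABORT_AFTER_WAIT = SELF)));

-- Switch a partition out with managed lock priority.
-- dbo.Sales_Archive is assumed to be partitioned identically to dbo.Sales.
ALTER TABLE dbo.Sales
SWITCH PARTITION 3 TO dbo.Sales_Archive PARTITION 3
WITH (WAIT_AT_LOW_PRIORITY (MAX_DURATION = 5 MINUTES, ABORT_AFTER_WAIT = SELF));
```

With ABORT_AFTER_WAIT = SELF the maintenance operation gives up after the wait instead of blocking user queries, which is the trade-off behind the "reduced maintenance downtime impact" claim.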

For more details, please refer to SQL_Server_Hekaton_CTP1_White_Paper. The SQL Server 2014 CTP1 software is available at http://technet.microsoft.com/en-US/evalcenter/dn205290.aspx (the Microsoft SQL Server 2014 CTP1 release is only available for the x64 architecture).

I appreciate your time; keep posting your comments. I will be very happy to review and reply to your comments, questions and doubts as soon as I can.

Checkpoint

Posted: June 24, 2013 in Database AdministratorTags: Checkpoint, Database Checkpoint, dirty pages, dirty read/write, SQL Server Checkpoint, when checkpoint happend, when checkpoint occure, why checkpoint in sql server

CHECKPOINT: As per MSDN/BOL, a checkpoint writes the current in-memory modified pages (known as dirty pages) and transaction log information from memory to disk, and also records information about the transaction log. For performance reasons, the Database Engine performs modifications to database pages in memory, in the buffer cache, and does not write these pages to disk after every change. Checkpoint is the SQL engine system process that writes all dirty pages to disk for the current database. The benefit of the checkpoint process is that it minimizes the time needed for a later recovery by creating a point where all dirty pages have been written to disk.

When does a CHECKPOINT happen?

- A CHECKPOINT statement is explicitly executed. A checkpoint occurs in the current database for the connection.
- A minimally logged operation is performed in the database; for example, a bulk-copy operation is performed on a database that is using the Bulk-Logged recovery model.
- Database files have been added or removed by using ALTER DATABASE.
- An instance of SQL Server periodically generates automatic checkpoints in each database to reduce the time that the instance would take to recover the database.
- A database backup is taken. Before a backup, the Database Engine performs a checkpoint so that all the changes to database pages (dirty pages) are contained in the backup.
- The server is stopped using any of the following methods, which cause a checkpoint:
  - Using the SHUTDOWN statement.
  - Stopping the SQL Server service through SQL Server Configuration Manager, SSMS, net stop mssqlserver, or Control Panel -> Services -> SQL Server Service.
  - When “SHUTDOWN WITH NOWAIT” is used, no checkpoint is executed on the database.
- The recovery interval server configuration is reached. This is when the active portion of the log exceeds the size that the server could recover in the amount of time defined by the recovery interval server configuration option.
- The transaction log is 70% full and the database is in truncation mode.
  - The database is in truncation mode when it uses the simple recovery model, and after a backup statement has been executed.
- An activity requiring a database shutdown is performed. For example, AUTO_CLOSE is ON and the last user connection to the database is closed, or a database option change is made that requires a restart of the database.

Long-running uncommitted transactions increase recovery time for all types of checkpoints.

The time required to perform a checkpoint depends directly on the number of dirty pages that the checkpoint must write.
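A short sketch of the statements involved: a manual checkpoint, a look at the recovery interval setting, and a read of the “Checkpoint pages/sec” Buffer Manager counter through a DMV instead of Performance Monitor. The 10-second duration is an arbitrary example value.

```sql
-- Issue a manual checkpoint in the current database.
CHECKPOINT;

-- Optionally request that the checkpoint completes within about 10 seconds
-- (10 is an arbitrary example value).
CHECKPOINT 10;

-- Inspect the server-wide recovery interval (an advanced option).
EXEC sp_configure 'show advanced options', 1;
RECONFIGURE;
EXEC sp_configure 'recovery interval (min)';

-- Read the checkpoint counter from the Buffer Manager object.
-- Note: the value is cumulative; sample it twice to derive a rate.
SELECT object_name, counter_name, cntr_value
FROM sys.dm_os_performance_counters
WHERE counter_name LIKE 'Checkpoint pages/sec%'
  AND object_name LIKE '%Buffer Manager%';
```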

We can monitor checkpoint I/O activity using Performance Monitor by looking at the “Checkpoint pages/sec” counter in the SQL Server:Buffer Manager object.

Tracking data/log file size increment for a database

Posted: June 24, 2013 in Database AdministratorTags: backupfile, backupset, Data file growth hi History, DataBase Growth History, DB Growth History, Log file growth history, SQL Server Database Size growth, SQL Server Database Size increment, Tracking data/log file size increment for a database

Here is a script to find a database’s file growth details, for either the data file (MDF) or the log file (LDF). To find this we can use two tables in msdb: backupfile, which contains one row for each data or log file of a database, and backupset, which contains a row for each backup set. Because each backup records the file sizes at that time, the backup history shows how the files have grown.

The script is as below.

    -- File size recorded at each backup, newest first
    SELECT BS.database_name
          ,BF.logical_name
          ,BF.file_type
          ,BF.file_size/(1024*1024) AS FileSize_MB
          ,BS.backup_finish_date
          ,BF.physical_name
    FROM msdb.dbo.backupfile BF
    INNER JOIN msdb.dbo.backupset BS ON BS.backup_set_id = BF.backup_set_id
        AND BS.database_name = 'VirendraTest' -- Database Name
    WHERE BF.logical_name = 'VirendraTest' -- DB's Logical Name
    ORDER BY BS.backup_finish_date DESC

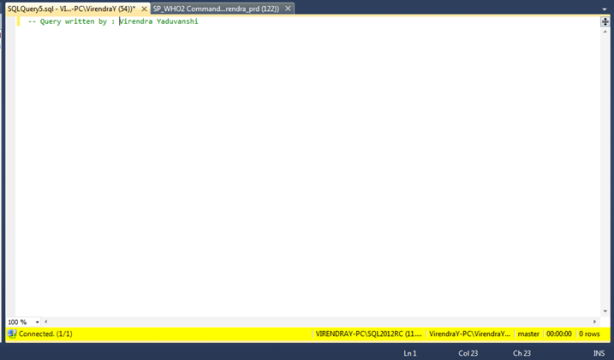

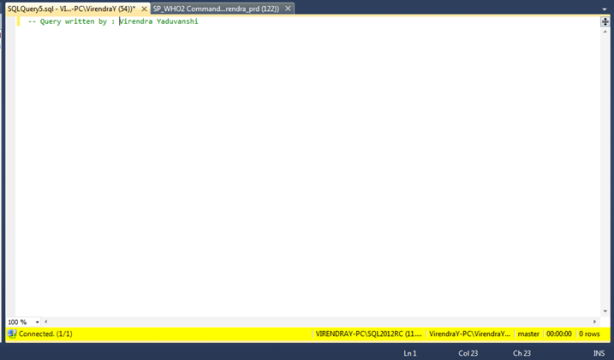

SQL Server Management Studio Tip: Get a Customized New Query Window

Posted: June 10, 2013 in Database AdministratorTags: Customized new query windows, Customizing SSMS, Modified new query windows, SQL Server's SSMS new query windows, SSMS new query windows, SSMS tips

Here is an SSMS tip for customizing the new query window.

For SQL Server 2008 (64-bit)

1) Find the file SQLFile.SQL, which is usually located at C:\Program Files (x86)\Microsoft SQL Server\100\Tools\Binn\VSShell\Common7\IDE\SqlWorkbenchProjectItems\Sql\SQLFILE.SQL, and modify the file as you wish.

For SQL Server 2008 (32-bit)

1) Find the file SQLFile.SQL, which is usually located at C:\Program Files\Microsoft SQL Server\100\Tools\Binn\VSShell\Common7\IDE\SqlWorkbenchProjectItems\Sql\SQLFILE.SQL, and modify the file as you wish.

For SQL Server 2012 (64-bit)

1) Find the file SQLFile.SQL, which is usually located at C:\Program Files\Microsoft SQL Server\110\Tools\Binn\ManagementStudio\SqlWorkbenchProjectItems\Sql\SQLFile.sql, and modify the file as you wish.

For SQL Server 2012 (32-bit)

1) Find the file SQLFile.SQL, which is usually located at C:\Program Files\Microsoft SQL Server\110\Tools\Binn\ManagementStudio\SqlWorkbenchProjectItems\Sql\SQLFile.sql, and modify the file as you wish.

Let's see my modified SQLFILE.SQL file.

Source : Mr. Amit Bansal’s Blog
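The author's own modified file is not reproduced in this post. As a purely hypothetical illustration of the kind of content that could go into SQLFile.sql, a template might look like the sketch below; every line in it is an assumption, not the author's actual file.

```sql
/* ------------------------------------------------------------
   Hypothetical SQLFile.sql template (illustration only).
   Author      :
   Created on  :
   Purpose     :
   ------------------------------------------------------------ */

SET NOCOUNT ON;

-- Show where this query window is connected before running anything.
SELECT @@SERVERNAME  AS ServerName,
       DB_NAME()     AS DatabaseName,
       SUSER_SNAME() AS LoginName,
       GETDATE()     AS OpenedAt;
```

Every new query window opened afterwards starts with this text, so it is worth keeping the template short and free of statements that modify data.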

SSRS Tutorial – Part 1

Posted: June 10, 2013 in Database AdministratorTags: SSRS, SSRS Tutorial, SSRS Tutorial Part 1, Step by step SSRS, Step by Step SQL Server Reporting services, SSRS Training

Hi,

Here is the SSRS tutorial: SSRS Tutorial – Part 1 (click here to download, or right-click -> Save target as...).

Thanks to the various resources available on the internet and BOL.

Please share your views on this.

Finding SQL Server Job details having no operator for notification

Posted: May 7, 2013 in Database AdministratorTags: Finding SQL Server Job details having no operator for notification, Job details, job notification, Job Operator, Operator, SQL server job, SQL Server notification details, Sysjob, Sysoperator

As a DBA, it can happen that you forget to set a notification on a job. Here is a very simple script to find out which jobs have a notification operator and which do not.

    -- All jobs with their e-mail operator; operator columns are NULL when none is set
    Select SJ.Name Job_Name,
           Case SJ.Enabled when 1 then 'Enabled' else 'Disabled' end Enable_Status,
           SJ.description Job_Description,
           SUSER_SNAME(SJ.owner_sid) Job_Owner,
           SJ.date_created Job_Created_Date,
           SJ.date_modified Job_Modified_Date,
           SP.Name Operator_Name,
           SP.email_address Emails,
           SP.last_email_date Last_Email_Date,
           SP.last_email_time Last_Email_Time
    from msdb..sysjobs SJ
    LEFT JOIN msdb..sysoperators SP on SP.ID = SJ.notify_email_operator_id
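The query above returns every job, with NULL operator columns where no operator is assigned. A small variation, sketched here, keeps only the jobs that have no e-mail operator, which is what the post title asks for.

```sql
-- Jobs with no e-mail notification operator assigned.
SELECT SJ.name AS Job_Name,
       CASE SJ.enabled WHEN 1 THEN 'Enabled' ELSE 'Disabled' END AS Enable_Status,
       SUSER_SNAME(SJ.owner_sid) AS Job_Owner,
       SJ.date_modified AS Job_Modified_Date
FROM msdb.dbo.sysjobs SJ
LEFT JOIN msdb.dbo.sysoperators SP
       ON SP.id = SJ.notify_email_operator_id
WHERE SP.id IS NULL          -- no matching operator row
ORDER BY SJ.name;
```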

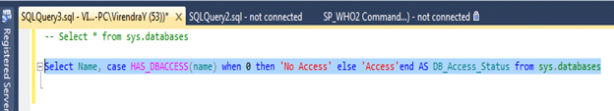

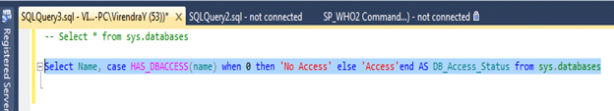

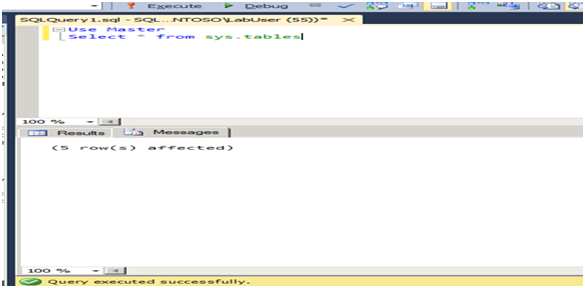

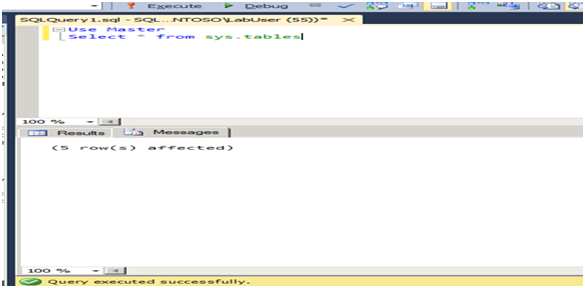

Finding Database Access details for currently logged user

Posted: May 6, 2013 in Database AdministratorTags: Currently logged user db details, Finding DB Access details, Finding DB User, How to know Database Access Details, SQL Server Database Access Details

Sometimes a developer tries to access a database without success, and only after some investigation finds out that he or she has no access rights to that particular database. For such cases, SQL Server’s very useful HAS_DBACCESS function can be used to list all databases together with the access status of the currently logged-in user. Here is a simple script to get the details:

    Select Name, case HAS_DBACCESS(name) when 0 then 'No Access' else 'Access' end AS DB_Access_Status from sys.databases

Note: if a database is in recovery mode, it is shown as No Access.
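Since a database that is not online is also reported as inaccessible, it can help to show the database state next to the access flag. The following sketch adds the state_desc and user_access_desc columns from sys.databases for that purpose.

```sql
-- Access status together with database state, so that "No Access"
-- caused by a non-ONLINE state can be told apart from missing permissions.
SELECT name,
       state_desc,
       user_access_desc,
       CASE HAS_DBACCESS(name) WHEN 1 THEN 'Access' ELSE 'No Access' END AS DB_Access_Status
FROM sys.databases
ORDER BY name;
```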

SQL Server’s Error: System assertion check has failed

Posted: January 16, 2013 in Database AdministratorTags: A system assertion check has failed. Check the SQL Server error log for details. Typically- an assertion failure is caused by a software bug or data corruption. To check for database corruption- consi, assertion, DBCC CHECKDB, Level 20, Line 1, Msg 3624, SQL Serve Error : System assertion check has failed, SQL Server’s Error : System assertion check has failed, State 1, System assertion check has failed

Yesterday one of my team members came to me with the following error:

SPID: XX

Process ID: XXXX

Msg 3624, Level 20, State 1, Line 1

A system assertion check has failed. Check the SQL Server error log for details. Typically, an assertion failure is caused by a software bug or data corruption. To check for database corruption, consider running DBCC CHECKDB. If you agreed to send dumps to Microsoft during setup, a mini dump will be sent to Microsoft. An update might be available from Microsoft in the latest Service Pack or in a QFE from Technical Support.

When we ran DBCC CHECKDB, the results were as follows:

DBCC CHECKDB WITH NO_INFOMSGS reported no problems.

DBCC CHECKDB reported 0 errors and 0 consistency errors.

After analyzing the queries he was using, we found that they used 4-5 joins and that, in two of the tables, the columns being compared had different data types, INT and BIGINT. After changing INT to BIGINT, our problem was resolved.
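One way to hunt for this kind of mismatch is to list columns that share a name across tables but are declared with different data types. This is only a sketch, and it assumes the join columns carry the same name in both tables, which was not stated for the case above.

```sql
-- Columns with the same name but different data types across user tables.
-- Assumption: related (join) columns are named identically.
SELECT c.name                          AS column_name,
       OBJECT_SCHEMA_NAME(c.object_id) AS schema_name,
       OBJECT_NAME(c.object_id)        AS table_name,
       t.name                          AS type_name
FROM sys.columns c
JOIN sys.tables  tb ON tb.object_id   = c.object_id
JOIN sys.types   t  ON t.user_type_id = c.user_type_id
WHERE c.name IN
      (
          SELECT c2.name
          FROM sys.columns c2
          JOIN sys.tables tb2 ON tb2.object_id = c2.object_id
          GROUP BY c2.name
          HAVING COUNT(DISTINCT c2.user_type_id) > 1
      )
ORDER BY c.name, table_name;
```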

The WordPress.com stats helper monkeys prepared a 2012 annual report for this blog.

Here’s an excerpt:

600 people reached the top of Mt. Everest in 2012. This blog got about 2,100 views in 2012. If every person who reached the top of Mt. Everest viewed this blog, it would have taken 4 years to get that many views. Click here to see the complete report.

Changing Instance Name

Posted: December 28, 2012 in Database AdministratorTags: Change sql server instance name, Changing a name of SQL SERVER instance, Changing Instance Name, How to change Instance Name, How to change SQL Server Instance Name, SQL Server Instance Name

In my personal observation, it is much better to reinstall SQL Server with the new name, then detach the databases from the old instance and attach them to the new instance, because an instance name is associated with so many things: performance counters, local groups for service start and file ACLs, service names for SQL Server and related (Agent, full-text) services, SQL Browser visibility, service master key encryption, various full-text settings, registry keys, the ‘local’ linked server, etc. However, the name can be changed by following the steps below.

    -- For default instance
    EXEC sp_dropserver 'old_name'
    go
    EXEC sp_addserver 'new_name','local'
    go

    -- For named instance
    EXEC sp_dropserver 'Server Name\old_Instance_name'
    go
    EXEC sp_addserver 'ServerName\New Instance Name','local'
    go

Verify the SQL Server instance configuration by running the queries below (@@SERVERNAME returns the new name only after the service has been restarted):

    EXEC sp_helpserver
    go
    Select @@SERVERNAME
    go

Then restart SQL Server with the following commands at the command prompt:

    net stop MSSQLServerServiceName
    net start MSSQLServerServiceName

Finding Space Used,Space left on Data and Log files

Posted: December 26, 2012 in Database AdministratorTags: Finding space availability on MDF and LDF file, Finding Space Used, MDF and LDF file space details, Space left on Data and Log files, Space left on MDF/LDF

Here is a script with which we can easily find the space used on MDF and LDF files.

    -- sysfiles stores sizes in 8 KB pages; dividing by 128 gives MB
    Select DB_NAME() as [DATABASE NAME],
           fileid as FILEID,
           CASE WHEN groupid = 0 then 'LOG FILE' else 'DATA FILE' END as FILE_TYPE,
           Name as PHYSICAL_NAME,        -- this is the logical file name
           Filename as PHYSICAL_PATH,
           Convert(int,round((sysfiles.size*1.000)/128.000,0)) as FILE_SIZE,
           Convert(int,round(fileproperty(sysfiles.name,'SpaceUsed')/128.000,0)) as SPACE_USED,
           Convert(int,round((sysfiles.size-fileproperty(sysfiles.name,'SpaceUsed'))/128.000,0)) as SPACE_LEFT
    From sysfiles;
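The sysfiles view used above is a compatibility view kept for older code. On SQL Server 2005 and later, the same figures can be read from sys.database_files, as in this sketch (integer division is used, so the results are whole megabytes).

```sql
-- Same report based on the sys.database_files catalog view.
-- size and SpaceUsed are expressed in 8 KB pages; /128 converts to MB.
SELECT DB_NAME()                                     AS DatabaseName,
       file_id                                       AS FileId,
       type_desc                                     AS FileType,     -- ROWS or LOG
       name                                          AS LogicalName,
       physical_name                                 AS PhysicalPath,
       size / 128                                    AS FileSize_MB,
       FILEPROPERTY(name, 'SpaceUsed') / 128         AS SpaceUsed_MB,
       (size - FILEPROPERTY(name, 'SpaceUsed')) / 128 AS SpaceLeft_MB
FROM sys.database_files;
```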

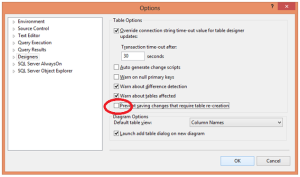

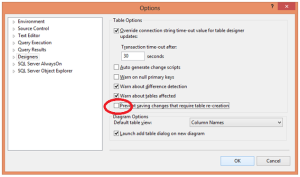

SSMS Error : Saving changes is not permitted

Posted: December 26, 2012 in Database AdministratorTags: Save (Not Permitted) Dialog Box, Saving changes is not permitted, Saving Changes Is Not Permitted On SQL Server 2008 Management Studio, Saving changes is not permitted. The changes that you have made require the following tables to be dropped and re-created. You have either made changes to a table that can't be re-created or enabled t, SQL Server Error: Saving changes is not permitted, SSMS Error, SSMS Error : Saving changes is not permitted

This error appears in the SSMS table designer when you try to save any of the following changes:

- Adding a new column to the middle of the table
- Dropping a column
- Changing column nullability
- Changing the order of the columns
- Changing the data type of a column

The message is:

Saving changes is not permitted. The changes that you have made require the following tables to be dropped and re-created. You have either made changes to a table that can’t be re-created or enabled the option Prevent saving changes that require the table to be re-created.

This problem occurs when the Prevent saving changes that require table re-creation option is enabled. To resolve it, follow these steps:

Click the Tools menu, click Options, expand Designers, and then click Table and Database Designers. Clear the Prevent saving changes that require the table to be re-created check box.
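An alternative that leaves the designer option untouched is to make such changes with Transact-SQL. The statements below are a sketch against a hypothetical dbo.Customer table; they are not taken from the original post.

```sql
-- dbo.Customer and its columns are hypothetical examples.

-- Add a column (it is appended at the end of the table).
ALTER TABLE dbo.Customer ADD MiddleName VARCHAR(50) NULL;

-- Change the data type and nullability of an existing column.
ALTER TABLE dbo.Customer ALTER COLUMN Phone VARCHAR(30) NULL;

-- Drop a column.
ALTER TABLE dbo.Customer DROP COLUMN Fax;
```

Changing the column order or inserting a column in the middle has no direct ALTER TABLE equivalent, which is why the designer falls back to dropping and re-creating the table for those cases.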

Difference between @@SERVERNAME and SERVERPROPERTY(‘SERVERNAME’)

Posted: December 26, 2012 in Database AdministratorTags: @@SERVERNAME, Difference between @@SERVERNAME and SERVERPROPERTY(‘SERVERNAME’), SERVERPROPERTY, sp_addserver, sp_dropserver, sp_helpserver

It is generally assumed that @@SERVERNAME and SERVERPROPERTY('SERVERNAME') return the same value. However, I once found that the two queries

Select @@SERVERNAME

Select SERVERPROPERTY('SERVERNAME')

returned different names. Books Online (BOL) explains why:

Although the @@SERVERNAME function and the SERVERNAME property of the SERVERPROPERTY function may return strings with similar formats, the information can be different. The SERVERNAME property automatically reports changes in the network name of the computer. In contrast, @@SERVERNAME does not report such changes. @@SERVERNAME reports changes made to the local server name using the sp_addserver or sp_dropserver stored procedure.

To resolve the issue, follow the steps below:

-- To see the server name

EXEC sp_helpserver

-- Remove the server from the list of known servers on the local instance of SQL Server.

EXEC sp_dropserver 'WRONG_SERVER_NAME', null

-- Add the server to the local instance of SQL Server.

EXEC sp_addserver 'REAL_SERVER_NAME', 'LOCAL'

-- Restart the SQL Server service so that @@SERVERNAME reports the new name.
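The steps above can be combined into one script that makes the change only when the two names actually differ. This is a minimal sketch, assuming the instance has no remote logins and is not used in replication (sp_dropserver fails in those cases); the variable names are my own.

```sql
-- Compare the configured local server name with the current network name.
DECLARE @configured sysname = @@SERVERNAME;
DECLARE @actual     sysname = CAST(SERVERPROPERTY('ServerName') AS sysname);

SELECT @configured AS ConfiguredName, @actual AS ActualName;

IF @configured IS NULL OR @configured <> @actual
BEGIN
    -- Drop the stale entry only if one exists.
    IF @configured IS NOT NULL
        EXEC sp_dropserver @server = @configured;

    -- Register the current network name as the local server.
    EXEC sp_addserver @server = @actual, @local = 'local';

    PRINT 'Local server name updated. Restart the SQL Server service for @@SERVERNAME to change.';
END
ELSE
    PRINT 'Names already match; nothing to do.';
```

Until the service is restarted, @@SERVERNAME keeps returning the old value even though sp_helpserver already shows the new one.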

SQL Server Upgrade Strategy

Posted: December 26, 2012 in Database AdministratorTags: In-Place Upgrade, Side by side upgrade, SQL Server upgradation from 2000 to 2008, SQL Server upgradation from 2005 to 2008, SQL Server Upgrade Strategy, Upgrading SQL Server

A successful upgrade to SQL Server 2008 R2/2012 should be smooth and trouble-free. To achieve that smooth transition, we must devote sufficient time to planning the upgrade, in proportion to the complexity of the database application; otherwise we risk costly and stressful errors and upgrade problems. As with all IT projects, planning for every contingency and then testing the plan gives us confidence that the upgrade will succeed. Neglecting this step increases the chance of running into difficulties that can derail or delay the upgrade.

Upgrade scenarios will be as complex as the underlying applications and instances of SQL Server. Some scenarios within an environment might be simple, others complex. Start planning by analyzing the upgrade requirements: review the upgrade strategies, understand the SQL Server hardware and software requirements for the specific version, and discover any blocking problems caused by backward-compatibility issues.

There are two upgrade scenarios: in-place upgrade and side-by-side upgrade.

In-Place Upgrade : With an in-place upgrade strategy, the SQL Server 2008 R2 Setup program directly replaces an instance of SQL Server 2000 or SQL Server 2005 with a new instance of SQL Server 2008 R2 on the same x86 or x64 platform. This kind of upgrade is called “in-place” because the upgraded instance of SQL Server 2000 or SQL Server 2005 is actually replaced by the new instance of SQL Server 2008 R2. You do not have to copy database-related data from the older instance to SQL Server 2008 R2 because the old data files are automatically converted to the new format. When the process is complete, the old instance of SQL Server 2000 or SQL Server 2005 is removed from the server, and only the backups that you retained can restore it to its previous state.

Note: If you want to upgrade just one database from a legacy instance of SQL Server and not upgrade the other databases on the server, use the side-by-side upgrade method instead of the in-place method.

Side by side upgrade : In a side-by-side upgrade, instead of directly replacing the older instance of SQL Server, required database and component data is transferred from an instance of SQL Server 2000 or SQL Server 2005 to a separate instance of SQL Server 2008 R2. It is called a “side-by-side” method because the new instance of SQL Server 2008 R2 runs alongside the legacy instance of SQL Server 2000 or SQL Server 2005, on the same server or on a different server.

There are two important options when you use the side-by-side upgrade method:

- You can transfer data and components to an instance of SQL Server 2008 R2 that is located on a different physical server or on a different virtual machine, or

- You can transfer data and components to an instance of SQL Server 2008 R2 on the same physical server

Both options let you run the new instance of SQL Server 2008 R2 alongside the legacy instance of SQL Server 2000 or SQL Server 2005. Typically, after the upgraded instance is accepted and moved into production, you can remove the older instance.

A side-by-side upgrade to a new server offers the best of both worlds: you can take advantage of a new and potentially more powerful server and platform, but the legacy server remains as a fallback if you encounter a problem. This method can also reduce upgrade downtime by letting you have the new server and instances tested, up, and running without affecting the current server and its workloads. You can test and address hardware or software problems encountered in bringing the new server online, without downtime of the legacy system. Although you would have to find a way to export data out of the new system to go back to the old system, rolling back to the legacy system would still be less time-consuming than a full SQL Server reinstall and restoring the databases, which a failed in-place upgrade would require. The downside of a side-by-side upgrade is that more manual intervention is required, so it might take more preparation time from the upgrade/operations team. However, the benefits of this degree of control are frequently worth the additional effort.
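One common way to transfer a database in a side-by-side upgrade is backup and restore. The sketch below is not taken from the reference guide; the database name SalesDb, the logical file names, and all paths are assumptions for illustration. Logins, SQL Server Agent jobs, and other server-level objects are not part of the backup and have to be moved separately.

```sql
-- On the legacy instance: take a full backup of the database to move.
-- (SalesDb and the paths below are hypothetical.)
BACKUP DATABASE SalesDb
TO DISK = N'D:\Backup\SalesDb.bak'
WITH INIT;

-- On the new SQL Server 2008 R2 instance: restore it, relocating the files.
-- The logical names after MOVE must match those reported by
-- RESTORE FILELISTONLY FROM DISK = N'D:\Backup\SalesDb.bak'.
RESTORE DATABASE SalesDb
FROM DISK = N'D:\Backup\SalesDb.bak'
WITH MOVE N'SalesDb'     TO N'E:\Data\SalesDb.mdf',
     MOVE N'SalesDb_log' TO N'F:\Log\SalesDb_log.ldf',
     RECOVERY;

-- The restored database keeps its old compatibility level (80 or 90);
-- raise it once application testing is complete.
ALTER DATABASE SalesDb SET COMPATIBILITY_LEVEL = 100;  -- 100 = SQL Server 2008 / 2008 R2

-- Verify the integrity of the upgraded database.
DBCC CHECKDB (SalesDb) WITH NO_INFOMSGS;
```

Because the legacy instance is untouched by these steps, it remains available as the fallback described above.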

Source: SQL Server 2008 Upgrade Technical Reference Guide

Microsoft SQL Server 2012–SQL Server Data Tools (SSDT)

Posted: December 25, 2012 in Database AdministratorTags: Juneau, Microsoft SQL Server 2012–SQL Server Data Tools (SSDT), SQL Server Data Tools, SQL Server Developer Tools, SQL Server Juneau, SSDT

SQL Server Data Tools for SQL Server 2012 is available as a free component of the SQL Server platform for all SQL Server users. It provides an integrated environment within Visual Studio in which database developers can carry out all their database design work for any SQL Server platform, both on and off premises. Database developers can use the SQL Server Object Explorer in Visual Studio to easily create or edit database objects and data, or to execute queries.

Developers will also appreciate the familiar Visual Studio tools brought to database development, specifically code navigation, IntelliSense, language support that parallels what is available for C# and VB, platform-specific validation, debugging, and declarative editing in the T-SQL editor, as well as a visual Table Designer for both database projects and online database instances.

SQL Server Data Tools (SSDT) is the final name for the product formerly known as SQL Server Developer Tools, Code-Named “Juneau”. SSDT provides a modern database development experience for the SQL Server and SQL Azure Database Developer. As the supported SQL Azure development platform, SSDT will be regularly updated online to ensure that it keeps pace with the latest SQL Azure features.

Free E-Book Gallery for Microsoft Technologies

Posted: December 24, 2012 in Database AdministratorTags: Download free ebooks, E-Book Gallery for Microsoft Technologies, ebook download, free e-books, free ebooks, Free Microsoft Books, Free MS books, free SQL server 2012 book download, MS books download, SQL Server 2012 ebooks

After a lot of searching on Google, I found a very nice link to a large collection of free Microsoft eBooks, covering SharePoint, Visual Studio, Windows Phone, Windows 8, Office 365, Office 2010, SQL Server 2012, Azure, and more. Here is the link

Another link is http://social.technet.microsoft.com/wiki/contents/articles/11608.e-book-gallery-for-microsoft-technologies.aspx

Happy Reading!

Connectivity Error – A transport-level error has occurred when sending the request to the server. (provider: TCP Provider, error: 0 – An existing connection was forcibly closed by the remote host.)

Posted: December 24, 2012 in Database AdministratorTags: A transport-level error has occurred when sending the request to the server, An existing connection was forcibly closed by the remote host.), Connectivity Error, Msg 10054, SQL Server restared error

This error indicates a connectivity problem with a previously opened session in SQL Server. Sometimes a user gets the following SQL Server error:

Msg 10054, Level 20, State 0, Line 0

A transport-level error has occurred when sending the request to the server. (provider: TCP Provider, error: 0 – An existing connection was forcibly closed by the remote host.)

Some possible causes are:

- The server was restarted, which closes all existing connections.

- Someone killed the SPID that the session was using.

- A network failure occurred.

- The SQL Server services were restarted.
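After reconnecting, a couple of quick checks can help narrow down which of these causes applies. This is only a sketch: sys.dm_os_sys_info needs VIEW SERVER STATE permission, and sp_readerrorlog is a sparsely documented procedure that requires elevated permissions, so treat its use here as an assumption about your environment.

```sql
-- When did the instance last start? A start time later than the moment the
-- old session was opened points to a server or service restart.
SELECT sqlserver_start_time
FROM sys.dm_os_sys_info;

-- The session ID of the new connection, for comparison with the old one.
SELECT @@SPID AS CurrentSpid;

-- Search the current error log for KILL entries; SQL Server records a
-- "Process ID ... was killed by hostname ..." message when a session is killed.
EXEC sp_readerrorlog 0, 1, N'killed';
```

If neither check shows anything, a network failure between client and server is the remaining candidate.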

SEQUENCE v/s IDENTITY

Posted: December 24, 2012 in Database AdministratorTags: Diff between SEQUENCE and IDENTITY, Difference between IDENTITY and SEQUENCE, Difference between SEQUENCE and IDENTITY, IDENTITY, SEQUENCE, Sequence Object, Sequence Object in SQL Server 2012, SEQUENCE v/s IDENTITY, SQL Server 2012 - SEQUESNCE

SEQUENCE is a new feature introduced in SQL Server 2012. A sequence works similarly to an IDENTITY value, but where an IDENTITY value is scoped to a specific column in a specific table, a sequence object is scoped to the database and controlled by application code. This allows us to synchronize seed values across multiple tables that reference one another in a parent-child relationship. With a little code we can also control whether the next value is used or saved for the next INSERT when the current transaction is rolled back, whereas an IDENTITY value is lost and leaves a gap when an INSERT is rolled back. Here are some key differences:

| Sequence | Identity |

| A sequence object generates a sequence of numbers just like an identity column, but it is not limited to a single table. | IDENTITY is table-specific. |

| The status of a sequence object can be viewed by querying the catalog view sys.sequences. Example: SELECT name, start_value, minimum_value, maximum_value, current_value FROM sys.sequences | The system function @@IDENTITY returns the last-inserted identity value. |

| A sequence is an object. Example: CREATE SEQUENCE MySequenceName AS INT START WITH 1 INCREMENT BY 1 MINVALUE 1 MAXVALUE 1000 NO CYCLE NO CACHE | Identity is a property of a column in a table. Example: CREATE TABLE TblIdentityChk ( ID INT IDENTITY (1,1), CUSTNAME VARCHAR(50) ) |

| You can obtain the new value before using it in an INSERT statement | You cannot obtain the new value in your application before using it |

| With a sequence, you do not need to insert a row to get a new ID; you can view the new ID directly. Example: SELECT NEXT VALUE FOR MySequenceName | If you need a new ID from an identity column, you must insert a row and then read the new ID. Example: INSERT INTO TblIdentityChk VALUES ('TEST CUSTOMER') followed by SELECT @@IDENTITY AS 'Identity' or SELECT SCOPE_IDENTITY() AS 'Identity' |

| You can add or remove a default constraint defined for a column with an expression that generates a new sequence value (extension to the standard) | You cannot add or remove the property from an existing column |

| You can generate new values in an UPDATE statement. Example: UPDATE TableName SET IDD = NEXT VALUE FOR MySequenceName | You cannot generate new values in an UPDATE statement, only in INSERT statements |

| You can make a sequence cycle simply by setting one property. Example: ALTER SEQUENCE MySequenceName CYCLE; | An identity column cannot cycle; that is, you cannot restart the counter after a particular interval. |

| The semantics of defining ordering in a multi-row insert are very clear using an OVER clause (extension to the standard), and are even allowed in SELECT INTO statements | The semantics of defining ordering in a multi-row insert are confusing, and in SELECT INTO statements are actually not guaranteed |

| A sequence can be cached simply by setting its CACHE property, which also improves performance. Example: ALTER SEQUENCE [dbo].[Sequence_ID] CACHE 3; | You cannot set a cache option for an identity column. |

| A sequence is not table-dependent, so you can easily stop using it. Example: first insert with the sequence object, INSERT INTO TblBooks([ID],[BookName]) VALUES (NEXT VALUE FOR MySequenceName, 'MICROSOFT SQL SERVER 2012'), then insert a second value without the sequence object, INSERT INTO TblBooks([ID],[BookName]) VALUES (2, 'MICROSOFT SQL SERVER 2012') | You cannot remove the identity property from a column directly. |

| You can define minimum and maximum values, whether to allow cycling, and a cache size option for performance. Example: ALTER SEQUENCE MySequenceName MAXVALUE 2000; | You cannot define minimum and maximum values, whether to allow cycling, or caching options |

| You can obtain a whole range of new sequence values in one shot using the stored procedure sp_sequence_get_range (extension to the standard), letting the application assign the individual values for increased performance | You cannot obtain a whole range of new identity values in one shot, letting the application assign the individual values |

| You can reseed as well as change the step size. Example: ALTER SEQUENCE MySequenceName RESTART WITH 7 INCREMENT BY 2; | You can reseed it but cannot change the step size. Example: DBCC CHECKIDENT (TblIdentityChk, RESEED, 4) |
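To make two of these points concrete, here is a small sketch of one sequence feeding the key column of two tables through default constraints, followed by a range reservation with sp_sequence_get_range. The sequence and table names (dbo.OrderNumberSeq, dbo.OnlineOrders, dbo.StoreOrders) are hypothetical and do not come from the table above.

```sql
-- One sequence shared by two tables (all object names are hypothetical).
CREATE SEQUENCE dbo.OrderNumberSeq AS INT START WITH 1 INCREMENT BY 1;
GO

CREATE TABLE dbo.OnlineOrders (
    OrderID      INT NOT NULL
        CONSTRAINT DF_OnlineOrders_OrderID DEFAULT (NEXT VALUE FOR dbo.OrderNumberSeq)
        PRIMARY KEY,
    CustomerName VARCHAR(50) NOT NULL
);

CREATE TABLE dbo.StoreOrders (
    OrderID      INT NOT NULL
        CONSTRAINT DF_StoreOrders_OrderID DEFAULT (NEXT VALUE FOR dbo.OrderNumberSeq)
        PRIMARY KEY,
    CustomerName VARCHAR(50) NOT NULL
);
GO

-- Each insert draws the next value from the same sequence, so order
-- numbers never collide across the two tables.
INSERT INTO dbo.OnlineOrders (CustomerName) VALUES ('TEST CUSTOMER 1');
INSERT INTO dbo.StoreOrders  (CustomerName) VALUES ('TEST CUSTOMER 2');

SELECT 'Online' AS Source, OrderID, CustomerName FROM dbo.OnlineOrders
UNION ALL
SELECT 'Store', OrderID, CustomerName FROM dbo.StoreOrders;

-- Reserve a block of 10 values in one call; the application can then
-- hand them out itself.
DECLARE @first SQL_VARIANT, @last SQL_VARIANT;
EXEC sys.sp_sequence_get_range
     @sequence_name     = N'dbo.OrderNumberSeq',
     @range_size        = 10,
     @range_first_value = @first OUTPUT,
     @range_last_value  = @last  OUTPUT;

SELECT CAST(@first AS INT) AS FirstReserved, CAST(@last AS INT) AS LastReserved;
```

With IDENTITY, each table would keep its own independent counter, so the same number could appear in both tables.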

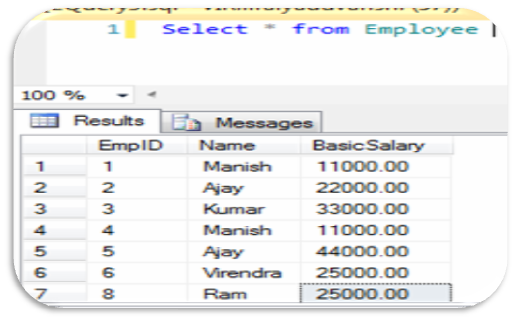

Row Numbering in SQL Select Query

Posted: December 24, 2012 in Database AdministratorTags: Cannot add identity column using the SELECT INTO statement, inherits the identity property, Msg 177, Msg 8108, Row Numbers in SQL Query, Row Numbers in SQL Select Query, SELECT statement with auto generate row id, SQL Server, The IDENTITY function can only be used when the SELECT statement has an INTO clause, Using Identity, Using IDENTITY in a SELECT

Sometimes we need to number the rows returned by a query. For this we can use the IDENTITY function in a SELECT statement, but not in a plain SELECT; it can only be used in the SELECT ... INTO form:

SELECT IDENTITY(datatype, seed, increment) AS ColName, Col1, Col2, ... ColN INTO TableName FROM SourceTableName

If we try it with a plain SELECT statement, such as

Select identity(int,1,1) IDD, EMPNAME,DEPT,BASICSALARY from Employee

it gives the error

Msg 177, Level 15, State 1, Line 1

The IDENTITY function can only be used when the SELECT statement has an INTO clause.

So to number the rows, we have to write the SELECT statement as

Select IDENTITY(int,1,1) IDD, NAME,BASICSALARY into #TempEmp from Employee

SELECT * from #TempEmp

The IDENTITY function takes the data type of the identity column and, optionally, a starting value (seed) and an increment. With these, we can customize the new column according to our requirement. For example, an integer column that starts at 1 and increases by 1 for each row is specified as:

IDENTITY(INT,1,1)

Note: If the source table already has an IDENTITY column, that column must not appear in the SELECT list; otherwise the statement fails. For example, if EMPID is an IDENTITY column in the Employee table and we run

Select IDENTITY(int,1,1) IDD, EMPID,NAME,BASICSALARY into #TempEmp from Employee

Select * from #TempEmp

it throws the error

Msg 8108, Level 16, State 1, Line 1

Cannot add identity column, using the SELECT INTO statement, to table '#TempEmp', which already has column 'EMPID' that inherits the identity property.
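Two alternatives are worth knowing here, neither of which is part of the original post. ROW_NUMBER() numbers rows in a plain SELECT without an INTO clause, and wrapping the source identity column in an expression stops the new table from inheriting the identity property, which avoids error 8108. The sketch below uses a hypothetical temp table #EmployeeDemo that mirrors the Employee columns used above.

```sql
-- Hypothetical stand-in for the Employee table; EMPID is an IDENTITY column.
CREATE TABLE #EmployeeDemo (
    EMPID       INT IDENTITY(1,1),
    NAME        VARCHAR(50),
    BASICSALARY MONEY
);
INSERT INTO #EmployeeDemo (NAME, BASICSALARY)
VALUES ('Asha', 30000), ('Ravi', 25000), ('Meena', 28000);

-- 1) ROW_NUMBER() works in a plain SELECT; the ORDER BY inside OVER
--    decides the numbering order.
SELECT ROW_NUMBER() OVER (ORDER BY NAME) AS IDD, EMPID, NAME, BASICSALARY
FROM #EmployeeDemo;

-- 2) EMPID + 0 is an expression, so the copied column does not inherit
--    the identity property and IDENTITY() can add its own column.
SELECT IDENTITY(INT, 1, 1) AS IDD, EMPID + 0 AS EMPID, NAME, BASICSALARY
INTO #EmployeeNumbered
FROM #EmployeeDemo;

SELECT * FROM #EmployeeNumbered;
```

ROW_NUMBER() is usually the simpler choice when the numbers are only needed in the query result and not stored.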

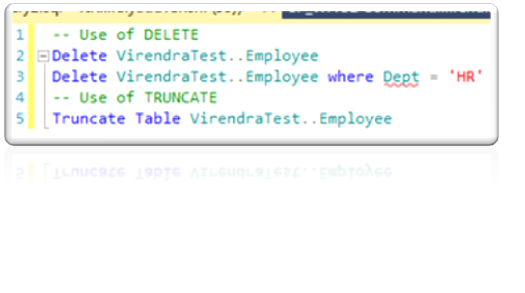

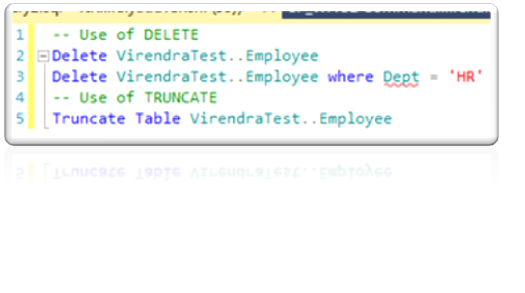

Ignoring all Constraints

Posted: December 24, 2012 in Database AdministratorTags: Bulk Insert, Bulk operation ignoring Constraints, Bulk Update ignoring trigger, Disable All Constraints, Disable Constraints with INSERT and UPDATE Statements, Disable Foreign, Disable Foreign Key Constraints with INSERT and UPDATE Statements, Ignoring all Constraints, Ignoring Trigger

Sometimes we have to INSERT or UPDATE data in bulk, and particular constraints act as a barrier because they reject rows that violate them. We can temporarily disable checking of a FOREIGN KEY or CHECK constraint on a particular table and re-enable it afterwards. To disable a constraint:

ALTER TABLE TableName NOCHECK CONSTRAINT ConstraintName

Then re-enable the constraint using

ALTER TABLE TableName CHECK CONSTRAINT ConstraintName

To disable all constraints on all tables of the current database, we can use

-- Disable all constraints

EXEC sp_MSforeachtable 'ALTER TABLE ? NOCHECK CONSTRAINT ALL'

-- Enable all constraints

EXEC sp_MSforeachtable 'ALTER TABLE ? CHECK CONSTRAINT ALL'
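One caveat: re-enabling with CHECK CONSTRAINT alone does not validate rows that were loaded while the constraint was off, so the constraint stays marked as not trusted. Adding WITH CHECK validates the existing rows as well. Here is a minimal sketch with hypothetical tables dbo.DemoParent and dbo.DemoChild; note also that sp_MSforeachtable is an undocumented procedure.

```sql
-- Hypothetical parent/child tables for illustration.
CREATE TABLE dbo.DemoParent (ParentID INT NOT NULL PRIMARY KEY);
CREATE TABLE dbo.DemoChild (
    ChildID  INT NOT NULL PRIMARY KEY,
    ParentID INT NOT NULL
        CONSTRAINT FK_DemoChild_DemoParent REFERENCES dbo.DemoParent (ParentID)
);
GO

-- Disable the foreign key before the bulk operation.
ALTER TABLE dbo.DemoChild NOCHECK CONSTRAINT FK_DemoChild_DemoParent;

-- ... bulk INSERT / UPDATE goes here ...

-- Re-enable the constraint AND validate the rows already in the table.
-- This fails if the load left orphaned rows behind.
ALTER TABLE dbo.DemoChild WITH CHECK CHECK CONSTRAINT FK_DemoChild_DemoParent;

-- Confirm the constraint is enabled and trusted again (both flags should be 0).
SELECT name, is_disabled, is_not_trusted
FROM sys.foreign_keys
WHERE parent_object_id = OBJECT_ID(N'dbo.DemoChild');
```

A trusted constraint matters beyond data integrity, because the query optimizer can only rely on constraints that are trusted.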

Best Practices for Bulk Importing Data

Posted: December 24, 2012 in Database AdministratorTags: Best Practices, Best Practices for Bulk Importing Data, Bulk Import, Bulk Import Data, IGNORE_TRIGGERS, INSERT INTO, Insert Perfomance, KEEPDEFAULTS, KEEPIDENTITY, TABLOCK, Using OPENROWSET, Using OPENROWSET and BULK to Bulk Import Data

- Using INSERT INTO…SELECT to Bulk Import Data with Minimal Logging

INSERT INTO <target_table> SELECT <columns> FROM <source_table> is an efficient way to transfer a large number of rows from one table, such as a staging table, to another table with minimal logging. Minimal logging can improve the performance of the statement and reduces the chance that the operation fills the available transaction log space during the transaction. Minimal logging for this statement has the following requirements:

• The recovery model of the database is set to simple or bulk-logged.

• The target table is an empty or nonempty heap.

• The target table is not used in replication.

• The TABLOCK hint is specified for the target table.

Rows that are inserted into a heap as the result of an insert action in a MERGE statement may also be minimally logged. Unlike the BULK INSERT statement, which holds a less restrictive Bulk Update lock, INSERT INTO…SELECT with the TABLOCK hint holds an exclusive (X) lock on the table. This means that we cannot insert rows using parallel insert operations.
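The requirements above can be sketched as follows. The staging and target tables (dbo.SalesStaging, dbo.SalesHistory) are hypothetical names, and the target is deliberately created without a clustered index so that it is a heap.

```sql
-- Hypothetical staging and target tables; the target is a heap.
CREATE TABLE dbo.SalesStaging (SaleID INT NOT NULL, Amount MONEY NOT NULL);
CREATE TABLE dbo.SalesHistory (SaleID INT NOT NULL, Amount MONEY NOT NULL);
GO

-- Check the recovery model of the current database; minimal logging
-- needs SIMPLE or BULK_LOGGED.
SELECT name, recovery_model_desc
FROM sys.databases
WHERE name = DB_NAME();

-- The TABLOCK hint on the target table is what makes the insert
-- eligible for minimal logging.
INSERT INTO dbo.SalesHistory WITH (TABLOCK) (SaleID, Amount)
SELECT SaleID, Amount
FROM dbo.SalesStaging;
```

Without the TABLOCK hint the same statement still works, but every inserted row is fully logged.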

- Using OPENROWSET and BULK to Bulk Import Data

The OPENROWSET function can accept the following table hints, which provide bulk-load optimizations with the INSERT statement:

• The TABLOCK hint can minimize the number of log records for the insert operation. The recovery model of the database must be set to simple or bulk-logged and the target table cannot be used in replication.

• The IGNORE_CONSTRAINTS hint can temporarily disable FOREIGN KEY and CHECK constraint checking.

• The IGNORE_TRIGGERS hint can temporarily disable trigger execution.

• The KEEPDEFAULTS hint allows the insertion of a table column’s default value, if any, instead of NULL when the data record lacks a value for the column.

• The KEEPIDENTITY hint allows the identity values in the imported data file to be used for the identity column in the target table.
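These hints are written on the target table of the INSERT statement, not inside OPENROWSET itself. A minimal sketch, assuming a hypothetical target table dbo.SalesHistory, a data file C:\Import\sales.dat, and a format file C:\Import\sales.fmt that defines the columns SaleID and Amount:

```sql
-- Hypothetical target table; file paths and column names are assumptions.
CREATE TABLE dbo.SalesHistory (SaleID INT NOT NULL, Amount MONEY NOT NULL);
GO

-- TABLOCK enables the bulk-load optimizations; IGNORE_CONSTRAINTS and
-- IGNORE_TRIGGERS skip constraint checking and trigger execution for
-- this load. KEEPIDENTITY or KEEPDEFAULTS could be added to the same
-- list when the target has an identity column or column defaults.
INSERT INTO dbo.SalesHistory WITH (TABLOCK, IGNORE_CONSTRAINTS, IGNORE_TRIGGERS)
       (SaleID, Amount)
SELECT src.SaleID, src.Amount
FROM OPENROWSET(
         BULK N'C:\Import\sales.dat',
         FORMATFILE = N'C:\Import\sales.fmt'
     ) AS src;
```

Constraints skipped this way are marked as not trusted afterwards, so it is worth re-validating them once the load is complete.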

Discontinued Database Engine Functionality in SQL Server 2012

Posted: December 23, 2012 in Database AdministratorTags: 80 compatibility levels, Discontinued Database Engine Functionality in SQL Server 2012, FASTFIRSTROW, Feature not in SQL 2012, Functionality not in SQL Server 2012, PWDCOMPARE, SP_Configure, sp_dboption, sp_dropalias, VIA Protocol

Here are Database Engine features that are no longer available in SQL Server 2012.

| Category | Discontinued feature | Replacement |

| Backup and Restore | BACKUP DATABASE and BACKUP LOG WITH PASSWORD, and BACKUP DATABASE and BACKUP LOG WITH MEDIAPASSWORD, are discontinued. RESTORE DATABASE and RESTORE LOG WITH [MEDIA]PASSWORD continues to be deprecated. | None |

| Backup and Restore | RESTORE DATABASE or RESTORE LOG … WITH DBO_ONLY | RESTORE DATABASE or RESTORE LOG … WITH RESTRICTED_USER |

| Compatibility level | 80 compatibility level | Databases must be set to at least compatibility level 90. |

| Configuration Options | sp_configure 'user instance timeout' and 'user instances enabled' | Use the Local Database feature. For more information, see SqlLocalDB Utility |

| Connection protocols | Support for the VIA protocol is discontinued. | Use TCP instead. |

| Database objects | WITH APPEND clause on triggers | Re-create the whole trigger. |

| Database options | sp_dboption | ALTER DATABASE |

| | SQL Mail | Use Database Mail. For more information, see Database Mail and Use Database Mail Instead of SQL Mail. |

| Memory Management | 32-bit Address Windowing Extensions (AWE) and 32-bit Hot Add memory support. | Use a 64-bit operating system. |

| Metadata | DATABASEPROPERTY | DATABASEPROPERTYEX |

| Programmability | SQL Server Distributed Management Objects (SQL-DMO) | SQL Server Management Objects (SMO) |

| Query hints | FASTFIRSTROW hint | OPTION (FAST n). |

| Remote servers | The ability for users to create new remote servers by using sp_addserver is discontinued. sp_addserver with the 'local' option remains available. Remote servers preserved during upgrade or created by replication can be used. | Replace remote servers by using linked servers. |

| Security | sp_dropalias | Replace aliases with a combination of user accounts and database roles. Use sp_dropalias to remove aliases in upgraded databases. |

| Security | The version parameter of PWDCOMPARE representing a value from a login earlier than SQL Server 2000 is discontinued. | None |

| Service Broker programmability in SMO | The Microsoft.SqlServer.Management.Smo.Broker.BrokerPriority class no longer implements the Microsoft.SqlServer.Management.Smo.IObjectPermission interface. | |

| SET options | SET DISABLE_DEF_CNST_CHK | None. |

| System tables | sys.database_principal_aliases | Use roles instead of aliases. |

| Transact-SQL | RAISERROR in the format RAISERROR integer 'string' is discontinued. | Rewrite the statement using the current RAISERROR(…) syntax. |

| Transact-SQL syntax | COMPUTE / COMPUTE BY | Use ROLLUP |

| Transact-SQL syntax | Use of *= and =* | Use ANSI join syntax. For more information, see FROM (Transact-SQL). |

| XEvents | databases_data_file_size_changed, databases_log_file_size_changed, databases_log_file_used_size_changed, locks_lock_timeouts_greater_than_0, locks_lock_timeouts | Replaced by the database_file_size_change event (for each of the three file size events), lock_timeout_greater_than_0, and lock_timeout |
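A few of these replacements are easier to see side by side. The statements below are only a sketch: SalesDb is a hypothetical database name, the old forms are shown in comments because they no longer run on SQL Server 2012, and the queries against system views are there just so the examples have something to read from.

```sql
-- sp_dboption is replaced by ALTER DATABASE (SalesDb is hypothetical).
-- Old: EXEC sp_dboption 'SalesDb', 'read only', 'true'
ALTER DATABASE SalesDb SET READ_ONLY;

-- DATABASEPROPERTY is replaced by DATABASEPROPERTYEX.
SELECT DATABASEPROPERTYEX(N'SalesDb', 'Updateability') AS Updateability;

-- The FASTFIRSTROW table hint is replaced by the OPTION (FAST n) query hint.
-- Old: SELECT name FROM sys.objects WITH (FASTFIRSTROW) ORDER BY name
SELECT name
FROM sys.objects
ORDER BY name
OPTION (FAST 1);

-- The *= and =* outer join operators are replaced by ANSI join syntax.
-- Old: ... FROM sys.tables t, sys.indexes i WHERE t.object_id *= i.object_id
SELECT t.name AS TableName, i.name AS IndexName
FROM sys.tables AS t
LEFT OUTER JOIN sys.indexes AS i
    ON i.object_id = t.object_id;

-- RAISERROR integer 'string' is replaced by the RAISERROR(...) form.
-- Old: RAISERROR 50001 'Something failed'
RAISERROR('Something failed', 16, 1);

-- COMPUTE / COMPUTE BY is replaced by ROLLUP, which adds the total
-- as an extra row in the same result set.
SELECT type_desc, COUNT(*) AS ObjectCount
FROM sys.objects
GROUP BY ROLLUP (type_desc);
```

Searching the code base for these old forms before the upgrade is an easy way to find statements that will stop working.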

SQL Server Management Studio 2012 – Enhanced Capabilities

Posted: December 20, 2012 in Database AdministratorTags: Code Sippsets, Enhancement, Intellisense, Intellisense Enhancement, SQL Management Studio 2012, SQL Management Studio 2012 – Enhanced Capabilities, SQL Server Management Studio 2012, SQL Server Management Studio 2012 – Enhanced Capabilities, SQL Server Management Studio 2012 new features, SQL Server SSMS 2012, SSMS 2012, SSMS new features

Here are some new SSMS 2012 features:

1) Intellisense enhancement: Intellisense now provides partial syntax completion, whereas in previous versions it displayed only the objects whose names start with the text you typed.

Let's see an example.

It shows all objects whose names contain “TABLE”.

2) At the bottom left corner of the query window there is a Zoom box that displays 100%; change this to 150%. Being able to zoom in the query window makes queries easier to read. Zoom is also available in the Results and Messages windows.

3) Code Snippets are an enhancement to the Template feature that was introduced in SQL Server Management Studio. You can also create and insert your own code snippets, or import them from a pre-created snippet library.

Differences between SQL Server 2008 and 2012

Posted: December 19, 2012 in Database AdministratorTags: Diff Between SQL Server 2008 and 2012, Difference between SQL 2008 abd 2012, Differences between SQL Server 2008 and SQL Server 2012, SQL Server 2012, sql server 2012 new features, SQL Server new features

Here are some differences between SQL Server 2008/R2 and 2012. More features will be added to this document soon.

| Sr. No. |

SQL Server 2008 |

SQL Server 2012 |

| 1 | Exceptions handle using TRY….CATCH | Unique Exceptions handling with THROW |

| 2 | High Availability features as Log Shipping, Replication, Mirroring & Clustering | New Feature ALWAYS ON introduced with addition of 2008 features. |

| 3 | Web Development and Business Intelligence Enhanced with business intelligence features. Excel PowerPivot by adding more drill and KPI through. | In Addition with SQL server 2008, Web Development and Business Intelligence Enhanced with business intelligence features and Excel PowerPivot by adding more drill and KPI’s. |

| 4 | Could not supported for Windows Server Core Support. | Supported for Windows Server Core Support |

| 5 | Columnstore Indexes not supported. | New Columnstore Indexes feature that is completely unique to SQL Server. They are special type of read-only index designed to be use with Data Warehouse queries. Basically, data is grouped and stored in a flat, compressed column index, greatly reducing I/O and memory utilization on large queries. |

| 6 | PowerShell Supported | Enhanced PowerShell Supported |

| 7 | Distributed replay features not available. | Distributed replay allows you to capture a workload on a production server, and replay it on another machine. This way changes in underlying schemas, support packs, or hardware changes can be tested under production conditions. |

| 8 | PowerView not available in BI features | PowerView is a fairly powerful self-service BI toolkit that allows users to create mash ups of BI reports from all over the Enterprise. |

| 9 | EXECUTE … with RECOMPLIE feature | Enhanced EXECUTE with many option like WITH RESULT SET…. |

| 10 | Maximum numbers of concurrent connections to SQL Server 2008 is 32767 | SQL server 2012 has unlimited concurrent connections. |

| 11 | The SQL Server 2008 uses 27 bit bit precision for spatial calculations. |

The SQL Server 2012 uses 48 bit precision for spatial calculations |

| 12 | TRY_CONVERT() and FORMAT() functions are not available in SQL Server 2008 |

TRY_CONVERT() and FORMAT() functions are newly included in SQL Server 2012 |

| 13 | ORDER BY Clause does not have OFFSET / FETCH options for paging | ORDER BY Clause have OFFSET / FETCH options for paging |

| 14 | SQL Server 2008 is code named as Katmai. | SQL Server 2012 is code named as Denali |

| 15 | In SQL Server 2008, audit is an Enterprise-only feature. Only available in Enterprise, Evaluation, and Developer Edition. | In SQL Server 2012,support for server auditing is expanded to include all editions of SQL Server. |

| 16 | Sequence is not available in SQL Server 2008 |

Sequence is included in SQL Server 2012.Sequence is a user defined object that generates a sequence of a number |

| 17 | The Full Text Search in SQL Server 2008 does not allow us to search and index data stored in extended properties or metadata. | The Full Text Search in SQL Server 2012 has been enhanced by allowing us to search and index data stored in extended properties or metadata. Consider a PDF document that has "properties" filled in like Name, Type, Folder path, Size, Date Created, etc. In the newest release of SQL Server, this data can be indexed and searched along with the data in the document itself. The data does have to be exposed to work, but it's possible now. |

| 18 | Analysis Services in SQL Server 2008 does not have the BI Semantic Model. | Analysis Services includes a new BI Semantic Model (BISM). BISM is a 3-layer model that includes Data Model, Business Logic, and Data Access. |

| 19 | The BCP utility does not support the -K option. | The BCP and SQLCMD utilities are enhanced with the -K option, which lets you specify read-only access to a secondary replica in an AlwaysOn availability group. |

| 20 | sys.dm_exec_query_stats | sys.dm_exec_query_stats added four columns to help troubleshoot long running queries. You can use the total_rows, min_rows, max_rows and last_rows aggregate row count columns to separate queries that are returning a large number of rows from problematic queries that may be missing an index or have a bad query plan. |
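
Several rows in the table (9, 12, 13, 16, and 20) can be tried directly in T-SQL. The following is a minimal sketch for SQL Server 2012 or later, not a definitive reference; the table dbo.DemoOrders and the sequence dbo.DemoOrderSeq are hypothetical names invented for illustration.

```sql
-- Hypothetical demo objects; not part of any real schema.
CREATE SEQUENCE dbo.DemoOrderSeq AS int START WITH 1 INCREMENT BY 1;

CREATE TABLE dbo.DemoOrders
(
    OrderId int NOT NULL PRIMARY KEY,
    Amount  varchar(20) NOT NULL   -- stored as text on purpose, to show TRY_CONVERT
);

-- Row 16: the sequence generates the key values.
INSERT INTO dbo.DemoOrders (OrderId, Amount) VALUES (NEXT VALUE FOR dbo.DemoOrderSeq, '10.5');
INSERT INTO dbo.DemoOrders (OrderId, Amount) VALUES (NEXT VALUE FOR dbo.DemoOrderSeq, 'n/a');
INSERT INTO dbo.DemoOrders (OrderId, Amount) VALUES (NEXT VALUE FOR dbo.DemoOrderSeq, '42');

-- Row 12: TRY_CONVERT returns NULL for 'n/a' instead of raising an error;
-- FORMAT renders the converted number as text with two decimal places.
SELECT OrderId,
       TRY_CONVERT(decimal(10, 2), Amount)               AS AmountValue,
       FORMAT(TRY_CONVERT(decimal(10, 2), Amount), 'N2') AS AmountText
FROM dbo.DemoOrders;

-- Row 13: paging with OFFSET / FETCH (skip one row, return the next two).
SELECT OrderId, Amount
FROM dbo.DemoOrders
ORDER BY OrderId
OFFSET 1 ROWS FETCH NEXT 2 ROWS ONLY;

-- Row 9: EXECUTE ... WITH RESULT SETS redefines the column names and types
-- of the result set returned by the executed statement.
EXEC sp_executesql N'SELECT OrderId, Amount FROM dbo.DemoOrders'
WITH RESULT SETS ((OrderId int, AmountText varchar(20)));

-- Row 20: the row-count columns added to sys.dm_exec_query_stats
-- (requires VIEW SERVER STATE permission).
SELECT TOP (10)
       qs.execution_count, qs.total_rows, qs.min_rows, qs.max_rows, qs.last_rows,
       st.text AS query_text
FROM sys.dm_exec_query_stats AS qs
CROSS APPLY sys.dm_exec_sql_text(qs.sql_handle) AS st
ORDER BY qs.total_rows DESC;
```

Drop the demo table and sequence afterwards if you run this on a shared instance.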


DATA DICTIONARY

Posted: December 19, 2012 in Database Administrator

Tags: Creating Data dictionary, Creating Data Dictionary in SQL Server, Data Dictionary, Documentation, Extended Property, Sp_Addextendedproperty, Sql, SQL Server

As DBAs, we have to maintain a data dictionary for each of our databases. Here is a script that generates a full view of the table structure details.

SELECT a.name [Table Name],
       b.name [Column Name],
       c.name [Data Type],
       b.length [Column Length],
       b.isnullable [Allow Nulls],
       CASE WHEN d.name IS NULL THEN 0 ELSE 1 END [Primary Key],
       CASE WHEN e.parent_object_id IS NULL THEN 0 ELSE 1 END [Foreign Key],
       CASE WHEN e.parent_object_id IS NULL THEN '-' ELSE g.name END [Reference Table],
       CASE WHEN h.value IS NULL THEN '-' ELSE h.value END [Description]
FROM sysobjects AS a
JOIN syscolumns AS b ON a.id = b.id
-- Join on xusertype so that alias types such as sysname do not duplicate rows
JOIN systypes AS c ON b.xusertype = c.xusertype
-- Columns of index 1 (the clustered index, assumed here to be the primary key)
LEFT JOIN (SELECT so.id, sc.colid, sc.name
           FROM syscolumns sc
           JOIN sysobjects so ON so.id = sc.id
           JOIN sysindexkeys si ON so.id = si.id AND sc.colid = si.colid
           WHERE si.indid = 1) d ON a.id = d.id AND b.colid = d.colid
LEFT JOIN sys.foreign_key_columns AS e ON a.id = e.parent_object_id
      AND b.colid = e.parent_column_id
LEFT JOIN sys.objects AS g ON e.referenced_object_id = g.object_id
-- class = 1 restricts the match to object and column properties
LEFT JOIN sys.extended_properties AS h ON a.id = h.major_id
      AND b.colid = h.minor_id AND h.class = 1
WHERE a.type = 'U'   -- user tables only
ORDER BY a.name

We can also get similar details, though not as complete as the above, using the following query:

SELECT * FROM INFORMATION_SCHEMA.COLUMNS
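
The [Description] column of the script is only filled in when columns carry extended properties. As a minimal sketch of how such a description could be added and read back, the example below uses sp_addextendedproperty and fn_listextendedproperty; the table dbo.EMPLOYEE, the column EMPID, the property name MS_Description, and the description text are assumptions chosen for illustration.

```sql
-- Attach a description to one column.
-- dbo.EMPLOYEE / EMPID and the property name are illustrative assumptions.
EXEC sys.sp_addextendedproperty
     @name       = N'MS_Description',
     @value      = N'Unique identifier of the employee',
     @level0type = N'SCHEMA', @level0name = N'dbo',
     @level1type = N'TABLE',  @level1name = N'EMPLOYEE',
     @level2type = N'COLUMN', @level2name = N'EMPID';

-- Read the description back for that column.
SELECT objname, name, value
FROM sys.fn_listextendedproperty(N'MS_Description',
                                 N'SCHEMA', N'dbo',
                                 N'TABLE',  N'EMPLOYEE',
                                 N'COLUMN', N'EMPID');

-- A narrower INFORMATION_SCHEMA query that lists only the
-- dictionary-style columns instead of SELECT *.
SELECT TABLE_SCHEMA, TABLE_NAME, COLUMN_NAME,
       DATA_TYPE, CHARACTER_MAXIMUM_LENGTH, IS_NULLABLE
FROM INFORMATION_SCHEMA.COLUMNS
ORDER BY TABLE_SCHEMA, TABLE_NAME, ORDINAL_POSITION;
```

Calling sp_addextendedproperty a second time with the same property name on the same column raises an error; sp_updateextendedproperty is the procedure for changing an existing value.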


Writing Efficient Queries

Posted: December 18, 2012 in Database Administrator

Tags: How to write optimise query, Query - Best Practices, Query tips, SQL Server, Wriiting Query in SQL Server, Writing Efficient Queries

Here are some initial tips for writing efficient, cost-effective queries.

- When using AND, put the condition least likely to be true first. Many database systems evaluate conditions from left to right, subject to operator precedence (a cost-based optimizer, such as the one in SQL Server, may reorder them, so treat this as a guideline rather than a guarantee). If you have two or more AND operators in a condition, the one to the left is evaluated first, and only if it is true is the next condition evaluated. Finally, if that condition is true, the third condition is evaluated. You can save the database system work, and hence increase speed, by putting the least likely condition first. For example, if you were looking for all members living in Delhi and born before January 1, 1960, you could write the following query:

SELECT FirstName, LastName FROM MemberDetails WHERE State = 'Delhi' AND DateOfBirth < '1960-01-01';

The query would work fine; however, the number of members born before that date is very small, whereas plenty of people live in Delhi. This means that State = 'Delhi' will be true many times, and the database system will go on to check the second condition, DateOfBirth < '1960-01-01'. If you swap the conditions around, the least likely condition (DateOfBirth < '1960-01-01') is evaluated first:

SELECT FirstName, LastName FROM MemberDetails
WHERE DateOfBirth < '1960-01-01' AND State = 'Delhi';

Because the condition is mostly going to be false, the second condition will rarely be executed, which saves time. It’s not a big deal when there are few records, but it is when there are a lot of them.

- When using OR, put the condition most likely to be true first. Whereas AND needs both sides to be true for the overall condition to be true, OR needs only one side to be true. If the left-hand side is true, there is no need for OR to check the other condition, so you can save time by putting the most likely condition first. Consider the following statement:

SELECT FirstName, LastName FROM MemberDetails
WHERE State = 'Delhi' OR DateOfBirth < '1960-01-01';

If State = 'Delhi' is true, and it is true more often than DateOfBirth < '1960-01-01' is true, then there is no need for the database system to evaluate the other condition, thus saving time.

- DISTINCT can be faster than GROUP BY. DISTINCT and GROUP BY often do the same thing: limit results to unique rows. However, DISTINCT is often faster than GROUP BY with some database systems. For example, examine the following GROUP BY:

SELECT MemberId FROM Orders GROUP BY MemberId;

The GROUP BY could be rewritten using the DISTINCT keyword:

SELECT DISTINCT MemberId FROM Orders;

- Use IN with your subqueries. When you write a query similar to the following, the database system has to get all the results from the subquery to make sure that it returns only one value:

SELECT FirstName, LastName FROM EMPLOYEE WHERE
EMPID = (SELECT EMPID FROM Orders WHERE OrderId = 2);

If you rewrite the query using the IN operator, the database system only needs to get results until there is a match with the values returned by the subquery; it doesn't necessarily have to get all the values:

SELECT FirstName, LastName FROM EMPLOYEE WHERE
EMPID IN (SELECT EMPID FROM Orders WHERE OrderId = 2);

- Avoid using SELECT * FROM. Specifying which columns you need has a few advantages, not all of them about efficiency. First, it makes clear which columns you're actually using. If you use SELECT * and actually use only two out of seven of the columns, it's hard to guess from the SQL alone which ones you're using. If you say SELECT FirstName, LastName …, then it's quite obvious which columns you're using. From an efficiency standpoint, specifying columns reduces the amount of data that has to pass between the database and the application connecting to the database. This is especially important where the database is connected over a network.

- Search on integer columns. If you have a choice, and often you don’t, search on integer columns. For example, if you are looking for the member whose name is VIRENDRA YADUVANSHI and whose MemberId is 101, then it makes sense to search via the MemberId because it’s much faster.
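
How much each of these tips helps depends on the database system and its optimizer, so it is worth measuring rather than assuming. The following is a minimal T-SQL sketch, assuming the MemberDetails and Orders tables from the examples above exist in the current database; it runs two pairs of equivalent queries with I/O and timing statistics switched on so that the variants can be compared in the Messages output.

```sql
-- Report logical reads and CPU/elapsed time for each statement.
SET STATISTICS IO ON;
SET STATISTICS TIME ON;

-- GROUP BY versus DISTINCT: both return the unique MemberId values.
SELECT MemberId FROM Orders GROUP BY MemberId;
SELECT DISTINCT MemberId FROM Orders;

-- The same AND filter written with the conditions in both orders.
SELECT FirstName, LastName
FROM MemberDetails
WHERE State = 'Delhi' AND DateOfBirth < '1960-01-01';

SELECT FirstName, LastName
FROM MemberDetails
WHERE DateOfBirth < '1960-01-01' AND State = 'Delhi';

-- Switch the reporting off again for the rest of the session.
SET STATISTICS TIME OFF;
SET STATISTICS IO OFF;
```

If both variants of a pair report the same logical reads and produce the same execution plan, the optimizer has treated them as equivalent and the rewrite makes no difference on that system.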

Deprecated Database Engine Features in SQL Server 2012

Posted: December 16, 2012 in Database Administrator

Tags: Commands not suggested to use for future coding, Deprecated commands SQL Server 2012, Deprecated Database Engine Features in SQL Server 2012, Discontinued Commands

The complete list of deprecated Database Engine features in SQL Server 2012 is published on MSDN. That topic describes the deprecated SQL Server Database Engine features that are still available in SQL Server 2012. These features are scheduled to be removed in a future release of SQL Server. Deprecated features should not be used in new applications.

For more details, please visit http://msdn.microsoft.com/en-us/library/ms143729.aspx
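
To see whether an instance is actually using any deprecated features, one option is the Deprecated Features performance counter object, which SQL Server exposes through sys.dm_os_performance_counters. The query below is a minimal sketch; the column aliases are my own, and the counts reflect usage since the instance last started.

```sql
-- List deprecated features that have been used since the last restart.
-- The object name is prefixed differently on default and named instances,
-- hence the LIKE pattern.
SELECT RTRIM(instance_name) AS deprecated_feature,
       cntr_value           AS times_used
FROM sys.dm_os_performance_counters
WHERE object_name LIKE '%Deprecated Features%'
  AND cntr_value > 0
ORDER BY cntr_value DESC;
```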


DC-DR Log Shipping Drill Down

Posted: December 14, 2012 in Database Administrator

Tags: DC-DR Log Shipping Drill Down, Log shipping - Failure of Primary Server, Log Shipping DC DR Drill, Log Shipping Failure, LOG Shipping swapping PRIMARY and SECONDARY Server, SQL Server Log Shipping Failure

It sometimes happens that our DC (the main primary DB instance) goes down for some reason, and the log-shipped secondary server at the DR location has to act as the primary server to keep production running smoothly.

In my example, the primary server is named 'PRIMARY', the secondary server is named 'SECONDARY', and the database used for log shipping is VIRENDRATEST.

Now suppose I want to swap the roles, so that the production 'PRIMARY' server becomes the secondary and 'SECONDARY' becomes the primary. The steps are below.

- First, be sure that all application hits to the DB server are stopped. For this, contact the Application/Production team to stop or disable the services and applications, and go ahead only after their confirmation.

- Take a full backup of the PRIMARY server's DB.

- Run the LS-Backup job at the PRIMARY server and then disable it.

- Run the LS-Copy job at the SECONDARY server and, after its completion, disable it.

- Run the LS-Restore job at the SECONDARY server and, after its completion, disable it.

- Now take a log backup from the PRIMARY server using the following command:

BACKUP LOG VIRENDRATEST TO DISK = N'D:\LS_BACKUP\VIRENDRATESTLOG.BAK' WITH NORECOVERY

- Manually copy the log backup file from the previous step from the PRIMARY server to the SECONDARY server.

- At SECONDARY, restore this last log backup file with the option WITH RECOVERY.

- Now the SECONDARY server's DB is up for production and the PRIMARY server's DB is in RESTORING mode.

- Now configure log shipping again, with SECONDARY as the primary and PRIMARY as the secondary.

- Run the LS-Backup job at the new primary server.

- Refresh both server instances; both servers should be in sync with the proper status.

- Check whether log shipping is working properly.

- Inform the Production team to start or enable all applications and services.
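
The T-SQL part of the steps above can be sketched roughly as follows. This is an illustration under stated assumptions, not a tested runbook: the job names (LSBackup_VIRENDRATEST, LSCopy_PRIMARY_VIRENDRATEST, LSRestore_PRIMARY_VIRENDRATEST) and the full backup file name are assumptions, so substitute the names from your own log shipping configuration. Each section has to be run on the server named in its comment.

```sql
-- ===== On PRIMARY =====
-- Full backup before the switch (the file name is an assumption).
BACKUP DATABASE VIRENDRATEST
TO DISK = N'D:\LS_BACKUP\VIRENDRATEST_FULL.BAK';

-- Run the log shipping backup job once, wait for it to finish, then disable it.
EXEC msdb.dbo.sp_start_job  @job_name = N'LSBackup_VIRENDRATEST';
-- (wait for the job to complete before disabling it)
EXEC msdb.dbo.sp_update_job @job_name = N'LSBackup_VIRENDRATEST', @enabled = 0;

-- ===== On SECONDARY =====
-- Run the copy job, wait for completion, disable it; then do the same for restore.
EXEC msdb.dbo.sp_start_job  @job_name = N'LSCopy_PRIMARY_VIRENDRATEST';
EXEC msdb.dbo.sp_update_job @job_name = N'LSCopy_PRIMARY_VIRENDRATEST', @enabled = 0;
EXEC msdb.dbo.sp_start_job  @job_name = N'LSRestore_PRIMARY_VIRENDRATEST';
EXEC msdb.dbo.sp_update_job @job_name = N'LSRestore_PRIMARY_VIRENDRATEST', @enabled = 0;

-- ===== On PRIMARY =====
-- Final log backup; NORECOVERY leaves the database in RESTORING state
-- so that it can later act as the log shipping secondary.
BACKUP LOG VIRENDRATEST
TO DISK = N'D:\LS_BACKUP\VIRENDRATESTLOG.BAK'
WITH NORECOVERY;

-- ===== On SECONDARY =====
-- After copying the file manually (the same path is assumed on this server),
-- restore it WITH RECOVERY to bring the database online.
RESTORE LOG VIRENDRATEST
FROM DISK = N'D:\LS_BACKUP\VIRENDRATESTLOG.BAK'
WITH RECOVERY;

-- ===== On either server =====
-- Confirm the expected state: ONLINE on SECONDARY, RESTORING on PRIMARY.
SELECT name, state_desc
FROM sys.databases
WHERE name = N'VIRENDRATEST';
```

Note that sp_start_job returns as soon as the job has been requested, so the script should be run section by section, checking the job history in between, and not as one batch.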

SSMS Boost add-in

Posted: December 12, 2012 in Database Administrator

Tags: boostup SSMS, SSMS add-in, SSMS addin, SSMS Boost add-in, SSMS features, Working with SQL Server in SSMS

I found a very powerful SSMS add-in called SSMSBoost.

The "SSMS Boost add-in" is designed to improve productivity when working with Microsoft SQL Server in SQL Server Management Studio. The main goal of the project is to speed up the daily tasks of SQL DBAs and T-SQL developers. Please visit http://www.ssmsboost.com/ for more details and to download it. Enjoy!